Is coding in Rust as bad as in C++?

A practical comparison of build and test speed between C++ and Rust.

Written by strager on

Update (): bjorn3 found an issue with my Cranelift benchmarks.

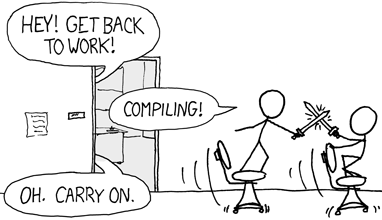

C++ is notorious for its slow build times. “My code's compiling” is a meme in the programming world, and C++ keeps this joke alive.

Projects like Google Chromium take an hour to build on brand new hardware and 6 hours to build on older hardware. There are tons of documented tweaks to make builds faster, and error-prone shortcuts to compile less stuff. Even with thousands of dollars of cloud computational power, Chromium build times are still on the order of half a dozen minutes. This is completely unacceptable to me. How can people work like this every day?

I've heard the same thing about Rust: build times are a huge problem. But is it really a problem in Rust, or is this anti-Rust propaganda? How does it compare to C++'s build time problem?

I deeply care about build speed and runtime performance. Fast build-test cycles make me a productive, happy programmer, and I bend over backwards to make my software fast so my customers are happy too. So I decided to see for myself whether Rust build times were as bad as people claim. Here is the plan:

- Find an open source C++ project.

- Isolate part of the project into its own mini project.

- Rewrite the C++ code line-by-line into Rust.

- Optimize the build for both the C++ project and the Rust project.

- Compare compile+test times between the two projects.

My hypotheses (educated guesses, not conclusions):

- The Rust port will have slightly fewer lines of code than the C++ version.

  Most functions and methods need to be declared twice in C++ (once in the header, and once in the implementation file). This isn't needed in Rust, reducing the line count.

- For full builds, C++ will take longer to compile than Rust (i.e. Rust wins).

  This is because of C++'s #include feature and C++ templates, which need to be compiled once per .cpp file. This compilation is done in parallel, but parallelism is imperfect.

- For incremental builds, Rust will take longer to compile than C++ (i.e. C++ wins).

  This is because Rust compiles one crate at a time, rather than one file at a time like in C++, so Rust has to look at more code after each small change.
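Here's a minimal Rust sketch of the reasoning behind the first hypothesis. The module and function names are hypothetical, not taken from quick-lint-js. In C++, a function shared across files usually needs a declaration in a header plus a definition in a .cpp file; in Rust, the single definition is reachable from other modules by path, so no second declaration is written.

```rust
// Hypothetical modules for illustration; in a real crate these would usually
// live in separate files (src/util.rs and src/lexer.rs).
mod util {
    /// Counts newline bytes in `text`. Defined once: there is no separate
    /// declaration like C++'s `std::size_t count_newlines(std::string_view);`.
    pub fn count_newlines(text: &str) -> usize {
        text.bytes().filter(|&b| b == b'\n').count()
    }
}

mod lexer {
    // Importing by path takes the place of `#include "util.h"`.
    use crate::util::count_newlines;

    /// Returns the number of lines in `source`, counting a final line
    /// that lacks a trailing newline.
    pub fn line_count(source: &str) -> usize {
        let newlines = count_newlines(source);
        if source.is_empty() || source.ends_with('\n') {
            newlines
        } else {
            newlines + 1
        }
    }
}
```

Each function appears exactly once, which is where the expected line-count savings would come from.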

What do you think? I polled my audience to get their opinion:

42% of people think that C++ will win the race.

35% of people agree with me that “it depends™”.

And 17% of people think Rust will prove us all wrong.

Check out the optimizing Rust build times section if you just want to make your Rust project build faster.

Check out the C++ vs Rust build times section if you just want the C++ vs Rust comparisons.

Let's get started!

Making the C++ and Rust test subjects

Finding a project

If I'm going to spend a month rewriting code, what code should I port? I decided on a few criteria:

- Few or no third-party dependencies. (Standard library is okay.)

- Works on Linux and macOS. (I don't care much about build times on Windows.)

- Extensive test suite. (Without one, I wouldn't know if my Rust code was correct.)

- A little bit of everything: FFI; pointers; standard and custom containers; utility classes and functions; I/O; concurrency; generics; macros; SIMD; inheritance

The choice is easy: port the project I've been working on for the past couple of years! I'll port the JavaScript lexer in the quick-lint-js project.

Trimming the C++ code

The C++ portion of quick-lint-js contains over 100k SLOC. I'm not going to port that much code to Rust; that would take me half a year! Let's instead focus on just the JavaScript lexer. This pulls in other parts of the project:

- Diagnostic system

- Translation system (used for diagnostics)

- Various memory allocators and containers (e.g. bump allocator; SIMD-friendly string)

- Various utility functions (e.g. UTF-8 decoder; SIMD intrinsic wrappers)

- Test helper code (e.g. custom assertion macros)

- C API

Unfortunately, this subset doesn't include any concurrency or I/O. This means I can't test the compile time overhead of Rust's async/await. But that's a small part of quick-lint-js, so I'm not too concerned.

I started the project by copying all the C++ code, then deleting code I knew was not relevant to the lexer, such as the parser and LSP server. I actually ended up deleting too much code and had to add some back in. I kept trimming and trimming until I couldn't trim no more. Throughout the process, I kept the C++ tests passing.

After stripping the quick-lint-js code down to the lexer (and everything the lexer needs), I ended up with about 17k SLOC of C++:

| | C++ SLOC |
|---|---|
| src | 9.3k |
| test | 7.3k |
| subtotal | 16.6k |
| dep: Google Test | 69.7k |

The rewrite

How am I going to rewrite thousands of lines of messy C++ code? One file at a time. Here's the process:

1. Find a good module to convert.

2. Copy-paste the code and tests, search-replace to fix some syntax, then keep running cargo test until the build and tests pass.

3. If it turns out I need another module first, go to step 2 for that needed module, then come back to this module.

4. If I'm not done converting everything, go to step 1.

There is one major difference between the Rust and C++ projects which might affect build times. In C++, the diagnostics system is implemented with a lot of code generation, macros, and constexpr. In the Rust port, I use code generation, proc macros, normal macros, and a dash of const. I have heard claims that proc macros are slow, and other claims that proc macros are only slow because they're usually poorly written. I hope I did a good job with my proc macros. 🤞
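For readers who haven't used Rust macros, here's a hedged sketch of what a normal (declarative) macro plus const can do for a diagnostic system: stamp out one type per diagnostic and build a table at compile time. The define_diagnostics! macro, the diagnostic names, and the codes are all made up for illustration; this is not the port's actual code. Proc macros, which the port also uses, must live in their own crate with proc-macro = true under [lib], so they don't fit in a single self-contained snippet.

```rust
/// Hypothetical declarative macro: for each `Name => "code", "message";` entry,
/// define a zero-sized struct with associated consts, then collect every code
/// into a const slice that is built at compile time.
macro_rules! define_diagnostics {
    ($($name:ident => $code:literal, $message:literal;)*) => {
        $(
            #[derive(Debug, Clone, Copy, PartialEq, Eq)]
            pub struct $name;

            impl $name {
                pub const CODE: &'static str = $code;
                pub const MESSAGE: &'static str = $message;
            }
        )*

        /// All diagnostic codes, in declaration order.
        pub const ALL_CODES: &[&str] = &[$($code),*];
    };
}

// Made-up diagnostics; the real project's names and codes differ.
define_diagnostics! {
    DiagUnclosedString => "E9001", "unclosed string literal";
    DiagInvalidUtf8 => "E9002", "invalid UTF-8 sequence";
}
```

Everything here is expanded and evaluated by rustc itself, so its compile-time cost shows up in ordinary phases like macro expansion and const evaluation rather than in a separate code generator.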

The Rust project turns out to be slightly larger than the C++ project: 17.1k SLOC of Rust compared to 16.6k SLOC of C++:

| | C++ SLOC | Rust SLOC | C++ vs Rust SLOC |
|---|---|---|---|
| src | 9.3k | 9.5k | +0.2k (+1.6%) |
| test | 7.3k | 7.6k | +0.3k (+4.3%) |
| subtotal | 16.6k | 17.1k | +0.4k (+2.7%) |
| dep: Google Test | 69.7k | | |
| dep: autocfg | | 0.6k | |
| dep: lazy_static | | 0.4k | |
| dep: libc | | 88.6k | |
| dep: memoffset | | 0.6k | |

Optimizing the Rust build

I care a lot about build times. Therefore, I had already optimized build times for the C++ project (before trimming it down). I need to put a similar amount of effort into optimizing build times for the Rust project.

Let's try these things which might improve Rust build times:

- Faster linker

- Cranelift backend

- Compiler and linker flags

- Different workspace and test layouts

- Minimize dependency features

- cargo-nextest

- Custom-built toolchain with PGO

Faster linker

My first step is to profile the build, using the -Zself-profile rustc flag. In my project, this flag outputs two different files. In one of the files, the run_linker phase stands out:

| Item | Self time | % of total time |
|---|---|---|
| run_linker | 129.20ms | 60.326 |
| LLVM_module_codegen_emit_obj | 23.58ms | 11.009 |
| LLVM_passes | 13.63ms | 6.365 |

In the past, I successfully improved C++ build times by switching to the Mold linker. Let's try it with my Rust project:

Shame; the improvement, if any, is barely noticeable.

That was Linux. macOS also has alternatives to the default linker: lld and zld. Let's try those:

On macOS, I also see little to no improvement by switching away from the default linker. I suspect that the default linkers on Linux and macOS are doing a good enough job with my small project. The optimized linkers (Mold, lld, zld) shine for big projects.

Cranelift backend

Let's look at the -Zself-profile profiles again. In the other file, the LLVM_module_codegen_emit_obj and LLVM_passes phases stand out:

| Item | Self time | % of total time |
|---|---|---|
| LLVM_module_codegen_emit_obj | 171.83ms | 24.274 |
| typeck | 57.50ms | 8.123 |
| eval_to_allocation_raw | 54.56ms | 7.708 |
| LLVM_passes | 50.03ms | 7.068 |
| codegen_module | 40.58ms | 5.733 |
| mir_borrowck | 36.94ms | 5.218 |

I heard talk about alternative rustc backends to LLVM, namely Cranelift. If I build with the rustc Cranelift backend, -Zself-profile looks promising:

| Item | Self time | % of total time |
|---|---|---|
| define function | 69.21ms | 12.307 |
| typeck | 57.94ms | 10.303 |
| eval_to_allocation_raw | 55.77ms | 9.917 |
| mir_borrowck | 37.44ms | 6.657 |
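The "% of total time" column lets you back out the total time a profile file covers: total = self time ÷ (percent ÷ 100). Here's a small sketch of that arithmetic, applied to rows from the two tables above; the function names are made up for illustration.

```rust
/// Backs out the total time covered by a -Zself-profile summary from one row:
/// if an item's self time is `self_time_ms` and that is `percent_of_total`
/// percent of the total, then total = self_time_ms / (percent_of_total / 100).
fn implied_total_ms(self_time_ms: f64, percent_of_total: f64) -> f64 {
    self_time_ms / (percent_of_total / 100.0)
}

/// Returns true if every (self time in ms, percent) row implies the same
/// total within `tolerance_ms`. Rows from one profile file should agree.
fn rows_agree(rows: &[(f64, f64)], tolerance_ms: f64) -> bool {
    let totals: Vec<f64> = rows
        .iter()
        .map(|&(self_time_ms, percent)| implied_total_ms(self_time_ms, percent))
        .collect();
    totals.windows(2).all(|pair| (pair[0] - pair[1]).abs() <= tolerance_ms)
}
```

With LLVM, LLVM_module_codegen_emit_obj (171.83ms at 24.274%) implies about 708ms in total. With Cranelift, define function (69.21ms at 12.307%) implies about 562ms. The typeck rows give the same totals, which is a handy consistency check. These totals only cover what that profile file records, not the end-to-end build.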

Unfortunately, actual build times are worse with Cranelift than with LLVM:

Compiler and linker flags

Compilers have a bunch of knobs to speed up builds (or slow them down). Let's try a bunch:

- -Zshare-generics=y (rustc) (Nightly only)

- -Clink-args=-Wl,-s (rustc)

- debug = false (Cargo)

- debug-assertions = false (Cargo)

- incremental = true and incremental = false (Cargo)

- overflow-checks = false (Cargo)

- panic = 'abort' (Cargo)

- lib.doctest = false (Cargo)

- lib.test = false (Cargo)

Note: quick, -Zshare-generics=y is the same as quick, incremental=true but with the -Zshare-generics=y flag enabled. Other bars exclude -Zshare-generics=y because that flag is not stable (thus requires the nightly Rust compiler).

Most of these knobs are documented elsewhere, but I haven't seen anyone mention linking with -s. -s strips debug info, including debug info from the statically-linked Rust standard library. This means the linker needs to do less work, reducing link times.
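Two of these knobs, debug-assertions and overflow-checks, change what the compiled code does, not just how fast it compiles. Both are on by default in Cargo's dev and test profiles. The sketch below uses hypothetical functions (not from the project) to mark the code each knob affects.

```rust
/// Returns the middle element of a sorted slice (the upper middle for even
/// lengths). debug_assert! only runs its check when debug-assertions is
/// enabled; with debug-assertions = false, the sortedness scan is skipped.
pub fn middle_of_sorted(values: &[u32]) -> Option<u32> {
    debug_assert!(
        values.windows(2).all(|pair| pair[0] <= pair[1]),
        "values must be sorted"
    );
    values.get(values.len() / 2).copied()
}

/// A plain `a + b` on u8 panics on overflow when overflow-checks is enabled,
/// and wraps when it is disabled. Explicit methods like checked_add (used
/// here) and wrapping_add behave the same under either setting.
pub fn add_lengths(a: u8, b: u8) -> Option<u8> {
    a.checked_add(b)
}

/// Reports whether this build was compiled with debug assertions.
pub fn debug_assertions_enabled() -> bool {
    cfg!(debug_assertions)
}
```

Because turning these off removes run-time checks, they are a trade-off for a test build rather than a free speedup.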

Workspace and test layouts

Rust and Cargo have some flexibility in how you place your files on disk. For this project, there are three reasonable layouts:

- Single crate:
  - Cargo.toml
  - src/
    - lib.rs
    - fe/
      - mod.rs
      - [...].rs
    - i18n/
      - mod.rs
      - [...].rs
    - test/ (test helpers)
      - mod.rs
      - [...].rs
    - util/
      - mod.rs
      - [...].rs

- Single crate, with the test helpers in their own crate:
  - Cargo.toml
  - src/
    - lib.rs
    - fe/
      - mod.rs
      - [...].rs
    - i18n/
      - mod.rs
      - [...].rs
    - util/
      - mod.rs
      - [...].rs
  - libs/
    - test/ (test helpers)
      - Cargo.toml
      - src/
        - lib.rs
        - [...].rs

- Workspace, with one crate per module:
  - Cargo.toml
  - libs/
    - fe/
      - Cargo.toml
      - src/
        - lib.rs
        - [...].rs
    - i18n/
      - Cargo.toml
      - src/
        - lib.rs
        - [...].rs
    - test/ (test helpers)
      - Cargo.toml
      - src/
        - lib.rs
        - [...].rs
    - util/
      - Cargo.toml
      - src/
        - lib.rs
        - [...].rs


In theory, if you split your code into multiple crates, Cargo can parallelize rustc invocations. Because I have a 32-thread CPU on my Linux machine, and a 10-thread CPU on my macOS machine, I expect unlocking parallelization to reduce build times.

For a given crate, there are also multiple places for your tests in a Rust project:

- Separate test files in tests/ (many test exes):
  - Cargo.toml
  - src/
    - a.rs
    - b.rs
    - c.rs
    - lib.rs
  - tests/
    - test_a.rs
    - test_b.rs
    - test_c.rs

- One test file in tests/ with a t/ submodule (1 test exe):
  - Cargo.toml
  - src/
    - a.rs
    - b.rs
    - c.rs
    - lib.rs
  - tests/
    - test.rs
    - t/
      - mod.rs
      - test_a.rs
      - test_b.rs
      - test_c.rs

- Test files inside src/:
  - Cargo.toml
  - src/
    - a.rs
    - b.rs
    - c.rs
    - lib.rs
    - test_a.rs
    - test_b.rs
    - test_c.rs

- Tests inside src files:
  - Cargo.toml
  - src/
    - a.rs (+tests)
    - b.rs (+tests)
    - c.rs (+tests)
    - lib.rs

Because of dependency cycles, I couldn't benchmark the tests inside src files layout. But I did benchmark the other layouts in some combinations:

The workspace configurations (with either separate test executables (many test exes) or one merged test executable (1 test exes)) seem to be the all-around winners. Let's stick with the workspace; many test exes configuration from here onward.
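For readers new to Cargo's test layouts, here's a minimal sketch of the tests inside src files layout, the one that couldn't be benchmarked here. The file a.rs and the is_digit function are hypothetical. The #[cfg(test)] module is compiled only by cargo test, into the library's own unit-test executable, and can see the parent module's private items.

```rust
// src/a.rs (hypothetical): the code and its tests share one file.

/// Returns true if `byte` is an ASCII digit.
pub fn is_digit(byte: u8) -> bool {
    byte.is_ascii_digit()
}

// Compiled only for `cargo test`; `use super::*` also brings in private items.
#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn digits_are_digits() {
        assert!(is_digit(b'7'));
    }

    #[test]
    fn letters_are_not_digits() {
        assert!(!is_digit(b'x'));
    }
}
```

By contrast, in the tests/ layouts each top-level file under tests/ is compiled as its own crate that links against the library and can only use its pub items. That is why one file per test yields many test executables.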

Minimize dependency features

Many crates support optional features. Sometimes, optional features are enabled by default. Let's see what features are enabled using the cargo tree command:

```
$ cargo tree --edges features
cpp_vs_rust v0.1.0
├── cpp_vs_rust_proc feature "default"
│   └── cpp_vs_rust_proc v0.1.0 (proc-macro)
├── lazy_static feature "default"
│   └── lazy_static v1.4.0
└── libc feature "default"
    ├── libc v0.2.138
    └── libc feature "std"
        └── libc v0.2.138
[dev-dependencies]
└── memoffset feature "default"
    └── memoffset v0.7.1
[build-dependencies]
└── autocfg feature "default"
    └── autocfg v1.1.0
```

The libc crate has a feature called std. Let's turn it off, test it, and see if build times improve:

```toml
[dependencies]
# Before:
# libc = { version = "0.2.138" }
# After (default features, which include "std", turned off):
libc = { version = "0.2.138", default-features = false }
```

Build times aren't any better. Maybe the std feature doesn't actually do anything meaningful? Oh well. On to the next tweak.
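A Cargo feature only matters where a crate's source checks for it. When a feature is enabled, Cargo passes it to rustc as a cfg flag, and only items behind matching #[cfg(...)] attributes change. Here's a minimal sketch for a hypothetical crate with a std feature (this is not libc's actual code), showing why disabling a feature that gates little code would save little compile time.

```rust
// Hypothetical crate code, not libc's. With the "std" feature enabled, Cargo
// passes `--cfg feature="std"` to rustc; only cfg-gated items are affected.

/// Compiled only when the "std" feature is enabled.
#[cfg(feature = "std")]
pub fn describe_build() -> &'static str {
    "built with the std feature"
}

/// Compiled only when the "std" feature is disabled.
#[cfg(not(feature = "std"))]
pub fn describe_build() -> &'static str {
    "built without the std feature"
}

/// Items without a cfg attribute are compiled either way.
pub fn always_available() -> u32 {
    42
}
```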

cargo-nextest

cargo-nextest is a tool which claims to be “up to 60% faster than cargo test.” My Rust code base is 44% tests, so maybe cargo-nextest is just what I need. Let's try it and compare build+test times:

On my Linux machine, cargo-nextest either doesn't help or makes things worse. The output does look pretty, though...

```
PASS [ 0.002s] cpp_vs_rust::test_locale no_match
PASS [ 0.002s] cpp_vs_rust::test_offset_of fields_have_different_offsets
PASS [ 0.002s] cpp_vs_rust::test_offset_of matches_memoffset_for_primitive_fields
PASS [ 0.002s] cpp_vs_rust::test_padded_string as_slice_excludes_padding_bytes
PASS [ 0.002s] cpp_vs_rust::test_offset_of matches_memoffset_for_reference_fields
PASS [ 0.004s] cpp_vs_rust::test_linked_vector push_seven
```

How about on macOS?

cargo-nextest does slightly speed up builds+tests on my MacBook Pro. I wonder why the speedup is OS-dependent. Perhaps it's actually hardware-dependent?

From here on, on macOS I will use cargo-nextest, but on Linux I will not.

Custom-built toolchain with PGO

For C++ builds, I found that building the compiler myself with profile-guided optimizations (PGO, also known as FDO) gave significant performance wins. Let's try PGO with the Rust toolchain.

Let's also try LLVM BOLT to further optimize rustc. And -Ctarget-cpu=native as well.

Compared to C++ compilers, it looks like the Rust toolchain published via rustup is already well-optimized. PGO+BOLT gave us less than a 10% performance boost. But a perf win is a perf win, so let's use this faster toolchain in the fight versus C++.

When I first tried building a custom Rust toolchain, it was slower than Nightly by about 2%. I struggled for days to at least reach parity, tweaking all sorts of knobs in the Rust config.toml, and cross-checking Rust's CI build scripts with my own. As I was putting the finishing touches on this article, I decided to rustup update, git pull, and re-build the toolchain from scratch. Then my custom toolchain was faster! I guess this was what I needed; perhaps I was accidentally on the wrong commit in the Rust repo. 🤷‍♀️

Optimizing the C++ build

When working on the original C++ project, quick-lint-js, I had already optimized build times using common techniques, such as using PCH, disabling exceptions and RTTI, tweaking build flags, removing unnecessary #includes, moving code out of headers, and externing template instantiations. But there are several C++ compilers and linkers to choose from. Let's compare them and choose the best before I compare C++ with Rust:

On Linux, GCC is a clear outlier. Clang fares much better. My custom-built Clang (which is built with PGO and BOLT, like my custom Rust toolchain) really improves build times compared to Ubuntu's Clang. libstdc++ builds slightly faster on average than libc++. Let's use my custom Clang with libstdc++ in my C++ vs Rust comparison.

On macOS, the Clang toolchain which comes with Xcode seems to be better-optimized than the Clang toolchain from LLVM's website. I'll use the Xcode Clang for my C++ vs Rust comparison.

C++20 modules

My C++ code uses #include. But what about import, introduced in C++20? Aren't C++20 modules supposed to make compilation super fast?

I tried to use C++20 modules for this project. As of , CMake support for modules on Linux is so experimental that even 'hello world' doesn't work.

Maybe 2023 will be the year of C++20 modules. As someone who cares a lot about build times, I really hope so! But for now, I will pit Rust against classic C++ #includes.

C++ vs Rust build times

I ported the C++ project to Rust and optimized the Rust build times as much as I could. Which one compiles faster: C++ or Rust?

Unfortunately, the answer is: it depends!

On my Linux machine, Rust builds are sometimes faster than C++ builds, but sometimes slower or the same speed. In the incremental lex benchmark, which modifies the largest src file, Clang was faster than rustc. But for the other incremental benchmarks, rustc came out on top.

On my macOS machine, however, the story is very different. C++ builds are usually much faster than Rust builds. In the incremental test-utf-8 benchmark, which modifies a medium-sized test file, rustc compiled slightly faster than Clang. But for the other incremental benchmarks, and for the full build benchmark, Clang clearly came out on top.

Scaling beyond 17k SLOC

I benchmarked a 17k SLOC project. But that was a small project. How do build times compare for a larger project of, say, 100k SLOC or more?

To test how well the C++ and Rust compilers scale, I took the biggest module (the lexer) and copy-pasted its code and tests, making 8, 16, and 24 copies.

Because my benchmarks also include the time it takes to run tests, I expect times to increase linearly, even with instant build times.

| Copies | C++ SLOC | C++ change vs 1x | Rust SLOC | Rust change vs 1x |
|---|---|---|---|---|
| 1x | 16.6k | | 17.1k | |
| 8x | 52.3k | +215% | 43.7k | +156% |
| 16x | 93.1k | +460% | 74.0k | +334% |
| 24x | 133.8k | +705% | 104.4k | +512% |
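The SLOC columns grow much more slowly than the copy count because only the lexer and its tests were duplicated. Here's a small sketch of the arithmetic behind the table, assuming every extra copy adds the same number of lines; the function names are made up for illustration.

```rust
/// Percentage increase from `base` to `scaled` (e.g. 1x SLOC to 8x SLOC).
fn percent_increase(base: f64, scaled: f64) -> f64 {
    (scaled / base - 1.0) * 100.0
}

/// Lines added per extra copy, assuming each of the `copies - 1` extra
/// copies contributes the same amount. `copies` must be at least 2.
fn lines_per_extra_copy(base: f64, scaled: f64, copies: u32) -> f64 {
    (scaled - base) / f64::from(copies - 1)
}
```

For the 8x row, percent_increase(16.6, 52.3) is about 215 and percent_increase(17.1, 43.7) is about 156, matching the table. lines_per_extra_copy(16.6, 52.3, 8) is about 5.1 (thousand C++ SLOC per copy) and lines_per_extra_copy(17.1, 43.7, 8) is about 3.8 (thousand Rust SLOC per copy); the 16x and 24x rows give nearly the same per-copy figures.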

Both Rust and Clang scaled linearly, which is good to see.

For C++, changing a header file (incremental diag-types) led to the biggest change in build time, as expected. Build time scaled with a low factor for the other incremental benchmarks, mostly thanks to the Mold linker.

I am disappointed with how poorly Rust's build scales, even with the incremental test-utf-8 benchmark which shouldn't be affected that much by adding unrelated files. This test uses the workspace; many test exes crate layout, which means test-utf-8 should get its own executable which should compile independently.

Conclusion

Are compilation times a problem with Rust? Yes. There are some tips and tricks to speed up builds, but I didn't find the magical order-of-magnitude improvements which would make me happy developing in Rust.

Are build times as bad with Rust as with C++? Yes. And for bigger projects, development compile times are worse with Rust than with C++, at least with my code style.

Looking at my hypotheses, I was wrong on all counts:

- The Rust port had more lines than the C++ version, not fewer.

- For full builds, compared to Rust, C++ builds took about the same amount of time (17k SLOC) or took less time (100k+ SLOC), not longer.

- For incremental builds, compared to C++, Rust builds were sometimes shorter and sometimes longer (17k SLOC) or much longer (100k+ SLOC), not always longer.

Am I sad? Yes. During the porting process, I have learned to like some aspects of Rust. For example, proc macros would let me replace three different code generators, simplifying the build pipeline and making life easier for new contributors. I don't miss header files at all. And I appreciate Rust's tooling (especially Cargo, rustup, and miri).

I decided to not port the rest of quick-lint-js to Rust. But... if build times improve significantly, I will change my mind! (Unless I become enchanted by Zig first.)

Appendix

Source code

Source code for the trimmed C++ project, the Rust port (including different project layouts), code generation scripts, and benchmarking scripts. GPL-3.0-or-later.

Linux machine

- name: strapurp

- CPU: AMD Ryzen 9 5950X (PBO; stock clocks) (32 threads) (x86_64)

- RAM: G.SKILL F4-4000C19-16GTZR 2x16 GiB (overclocked to 3800 MT/s)

- OS: Linux Mint 21.1

- Kernel: Linux strapurp 5.15.0-56-generic #62-Ubuntu SMP Tue Nov 22 19:54:14 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux

- Linux performance governor: schedutil

- CMake: version 3.19.1

- Ninja: version 1.10.2

- GCC: version 12.1.0-2ubuntu1~22.04

- Clang (Ubuntu): version 14.0.0-1ubuntu1

- Clang (custom): version 15.0.6 (Rust fork; commit 3dfd4d93fa013e1c0578d3ceac5c8f4ebba4b6ec)

- libstdc++ for Clang: version 11.3.0-1ubuntu1~22.04

- Rust Stable: 1.66.0 (69f9c33d7 2022-12-12)

- Rust Nightly: version 1.68.0-nightly (c7572670a 2023-01-03)

- Rust (custom): version 1.68.0-dev (c7572670a 2023-01-03)

- Mold: version 0.9.3 (ec3319b37f653dccfa4d1a859a5c687565ab722d)

- binutils: version 2.38

macOS machine

- name: strammer

- CPU: Apple M1 Max (10 threads) (AArch64)

- RAM: Apple 64 GiB

- OS: macOS Monterey 12.6

- CMake: version 3.19.1

- Ninja: version 1.10.2

- Xcode Clang: Apple clang version 14.0.0 (clang-1400.0.29.202) (Xcode 14.2)

- Clang 15: version 15.0.6 (LLVM.org website)

- Rust Stable: 1.66.0 (69f9c33d7 2022-12-12)

- Rust Nightly: version 1.68.0-nightly (c7572670a 2023-01-03)

- Rust (custom): version 1.68.0-dev (c7572670a 2023-01-03)

- lld: version 15.0.6

- zld: commit d50a975a5fe6576ba0fd2863897c6d016eaeac41

Benchmarks

- build+test w/ deps

  - C++: cmake -S . -B build -G Ninja && ninja -C build quick-lint-js-test && build/test/quick-lint-js-test (timed)

  - Rust: cargo fetch (untimed), then cargo test (timed)

- build+test w/o deps

  - C++: cmake -S . -B build -G Ninja && ninja -C build gmock gmock_main gtest (untimed), then ninja -C build quick-lint-js-test && build/test/quick-lint-js-test (timed)

  - Rust: cargo build --package lazy_static --package libc --package memoffset (untimed), then cargo test (timed)

- incremental diag-types

  - C++: build+test (untimed), then modify diagnostic-types.h, then ninja -C build quick-lint-js-test && build/test/quick-lint-js-test

  - Rust: build+test (untimed), then modify diagnostic_types.rs, then cargo test

- incremental lex

  - Like incremental diag-types, but with lex.cpp/lex.rs

- incremental test-utf-8

  - Like incremental diag-types, but with test-utf-8.cpp/test_utf_8.rs

For each executed benchmark, 12 samples were taken. The first two were discarded. Bars show the average of the last 10 samples. Error bars show the minimum and maximum sample.